The Generative-X Era and Collingridge's Dilemma

ChatGPT launched on November 30, 2022, and its impact on industries around the world over the past year has been tremendous. Unlike IT automation technology, which mainly affected physical tasks, generative AI is having a major impact on knowledge service industries such as program development, research, education, law, healthcare, culture, and design. It is ushering in the era of Generative-X, in which AI generates images, videos, programs, designs, and products.

In particular, automating and adding intelligence to time-consuming knowledge work such as documentation, research, design, and development is improving work efficiency and raising productivity. Among these, the most popular application of generative AI is program development, where developers are particularly adept at using tools such as ChatGPT.

According to the State of the Developer Ecosystem 2023 report by developer tools company JetBrains, which surveyed 26,348 developers across 196 countries, 77% of developers worldwide are using ChatGPT, while only 3% have never used ChatGPT. "The field of program development will be left with only advanced and entry-level developers, and the middle will be replaced by generative AI," IT experts say.

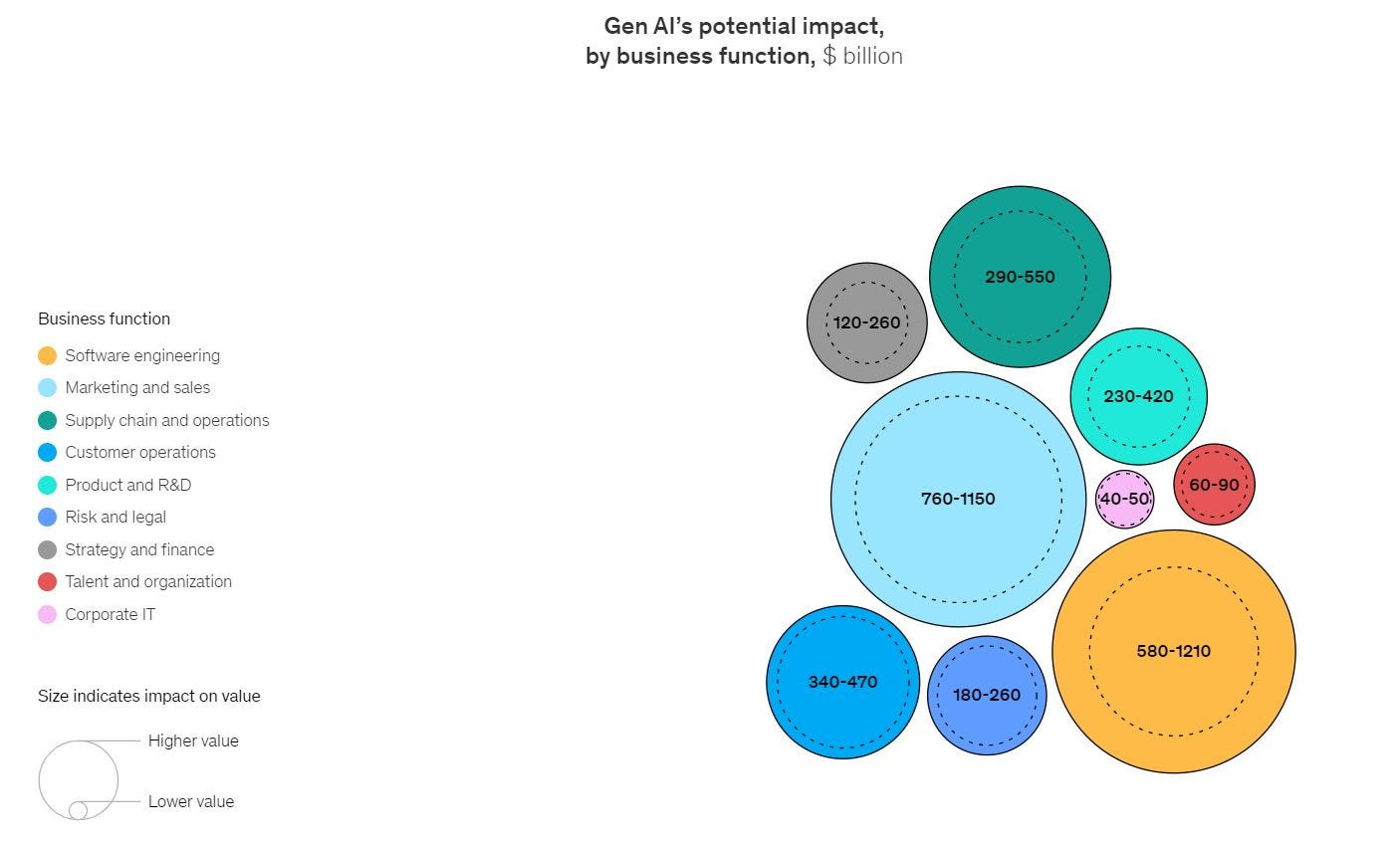

Assessments of the economic impact of generative AI are also positive. McKinsey has estimated that generative AI could add up to $4.4 trillion in economic value to the global economy annually. Goldman Sachs has raised its long-term economic growth forecasts for major countries around the world, including the U.S., predicting that advances in AI will significantly improve productivity and accelerate economic growth.

Gen AI's potential impact, by business function, $ billion. Source: McKinsey & Company

However, the rapid development of artificial intelligence is also causing anxiety. The five-day boardroom coup at OpenAI in November 2023 was also driven by some board members' concerns about the rapid pace of AI development. Some board members, including OpenAI co-founder and chief scientist Ilya Sutskever, who initially backed Sam Altman's removal, argued that GPT should not be rushed toward AGI (artificial general intelligence). In their view, the pace of development should be slowed, applying the principles of regulation and precaution, until it can be shown that AI will not harm existing social norms. The majority of OpenAI employees, however, supported Sam Altman.

"The OpenAI debacle is a realization of fears that commercial forces are working against the responsible development of AI technology, and it's time to rethink how we regulate AI companies and technologies going forward."

- Nature -

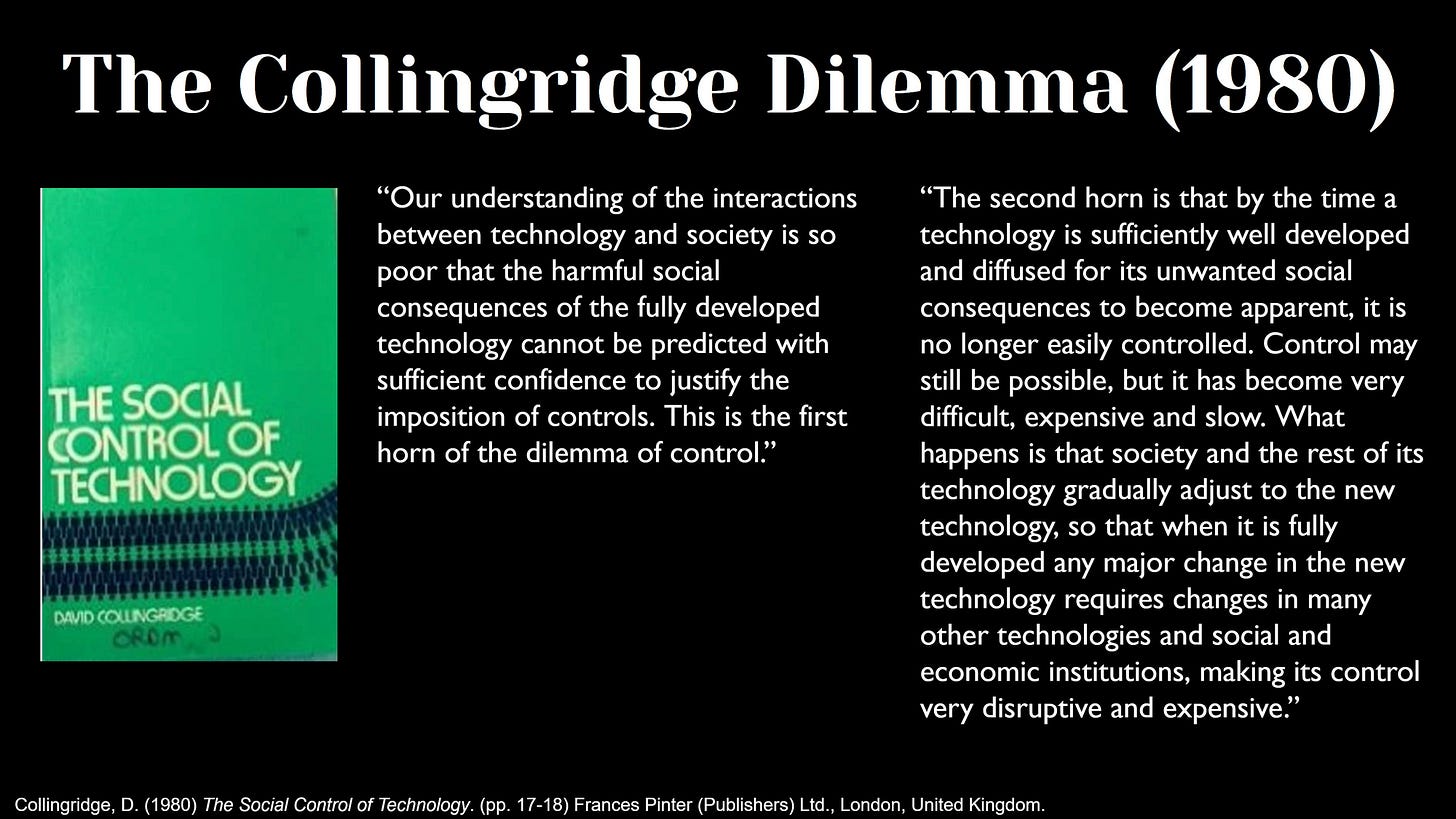

So where does the priority lie between technological progress and social safety? In his 1980 book The Social Control of Technology, Professor David Collingridge framed this issue as what is now known as Collingridge's dilemma. The dilemma is that a technology is relatively easy to regulate in its early stages, before it has developed into something concrete, but social control becomes much more difficult to enforce once the technology is widely deployed and its effects are known. The answer is: "AI development should continue, but with appropriate social controls in place before it's too late."

In the wake of the failed OpenAI boardroom coup, the debate on regulating AI technology is expected to intensify, and governments are moving faster to regulate AI. In June 2023, the EU announced plans to require companies that create generative AI, such as ChatGPT, to disclose the source and copyright of the data used for training, and to seek EU approval before launching their services. The AI law will take two years to implement once it is approved by the European Parliament and member states, and companies that violate it will face fines of up to €35 million. However, the feasibility of the law remains in question, since no one knows what issues will emerge in the AI industry during that two-year period.

Reporters Without Borders also published the Paris Charter for Artificial Intelligence and Journalism, which sets out ethical principles for journalists' use of AI, including "prioritizing ethics in media's technological choices," "maintaining human editorial authority," and "subjecting AI to independent external evaluation."